Framework: A new workflow driven by Prompt

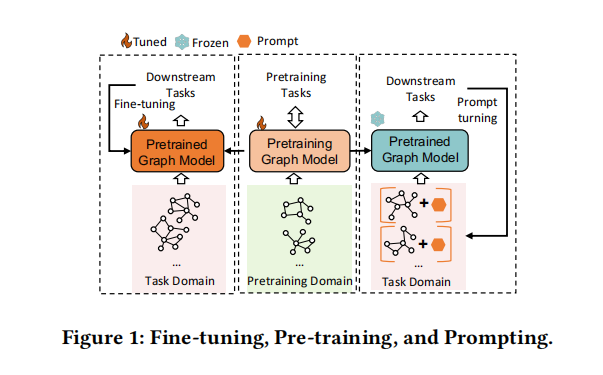

“Pre-training and fine-tuning” has been adopted as a standard workflow for many graph tasks because general graph knowledge learned during pre-training can compensate for the lack of graph annotations in each application. However, graph tasks at the node level, edge level, and graph level are highly diverse, which often makes the pre-training pretext incompatible with these multiple...

Method I: Reformulating Downstream Tasks

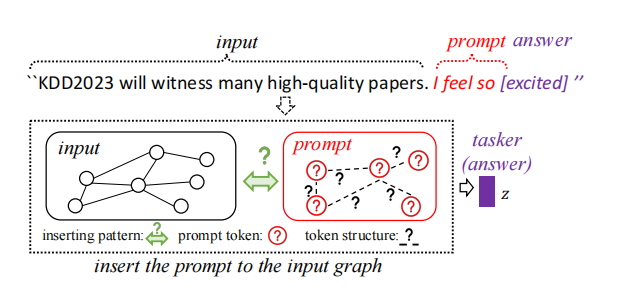

To narrow the gap between various graph tasks and state-of-the-art pre-training strategies, we study the task space of graph applications and reformulate downstream problems as graph-level tasks.
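As a rough illustration of what such a reformulation can look like, the sketch below turns a node-level task into a graph-level one by replacing each labeled node with the subgraph induced by its k-hop neighborhood. The helper names (`k_hop_subgraph`, `node_task_to_graph_task`), the adjacency-dictionary format, and the choice of k-hop neighborhoods are assumptions made for this example, not details stated above.

```python
from collections import deque

def k_hop_subgraph(adj, center, k):
    """Return (nodes, edges) of the subgraph induced by all nodes within k hops of `center`.

    `adj` is an undirected adjacency dict {node: [neighbors]} (assumed format).
    """
    dist = {center: 0}
    queue = deque([center])
    while queue:
        u = queue.popleft()
        if dist[u] == k:
            continue  # do not expand beyond k hops
        for v in adj[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    nodes = sorted(dist)
    # keep each undirected edge once, and only if both endpoints were reached
    edges = sorted((u, v) for u in nodes for v in adj[u] if v in dist and u < v)
    return nodes, edges

def node_task_to_graph_task(adj, labels, k=1):
    """Map each labeled node to a (subgraph, label) pair, i.e. a graph-level sample."""
    return [(k_hop_subgraph(adj, n, k), y) for n, y in sorted(labels.items())]

# Toy graph: a triangle 0-1-2 with a tail 2-3-4.
adj = {0: [1, 2], 1: [0, 2], 2: [0, 1, 3], 3: [2, 4], 4: [3]}
labels = {0: "a", 4: "b"}
dataset = node_task_to_graph_task(adj, labels, k=1)
```

Each entry of `dataset` is a small graph paired with the label of its center node, so a graph-level model can consume a node-level task. An edge-level task could be handled analogously by growing the neighborhood from both endpoints of the edge.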

Method II: Prompt Graph Design

We first unify the format of graph prompts and language prompts in terms of three components: the prompt token, the token structure, and the inserting pattern. In this way, the prompting idea from NLP can be introduced seamlessly to the graph domain.
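To make the three components concrete, here is a minimal sketch under stated assumptions: prompt tokens are vectors with the same dimension as node features, the token structure links token pairs whose sigmoid-squashed dot product exceeds a threshold, and the inserting pattern adds each sufficiently similar token to a node's features, weighted by that similarity. The function names, the threshold value, and the similarity rule are illustrative choices, not a specification of the method.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def token_structure(tokens, threshold=0.5):
    """Token structure (assumed rule): link token pairs with similarity above `threshold`."""
    return [(i, j)
            for i in range(len(tokens))
            for j in range(i + 1, len(tokens))
            if sigmoid(dot(tokens[i], tokens[j])) > threshold]

def insert_prompt(node_feats, tokens, threshold=0.5):
    """Inserting pattern (assumed rule): add similarity-weighted tokens to node features."""
    prompted = []
    for x in node_feats:
        new_x = list(x)
        for p in tokens:
            w = sigmoid(dot(p, x))  # similarity is measured against the original features
            if w > threshold:
                new_x = [xi + w * pi for xi, pi in zip(new_x, p)]
        prompted.append(new_x)
    return prompted

# Two prompt tokens and two nodes, all with 2-dimensional features (toy values).
tokens = [[1.0, 0.0], [1.0, -1.0]]
node_feats = [[2.0, 0.0], [-2.0, 0.0]]
links = token_structure(tokens)
prompted = insert_prompt(node_feats, tokens)
```

In this toy setup the first node is similar to both tokens and has its features shifted, while the second node falls below the threshold for both and is left unchanged. In a trained system the token vectors would be learnable parameters rather than fixed values.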

Method III: Applying Meta-learning

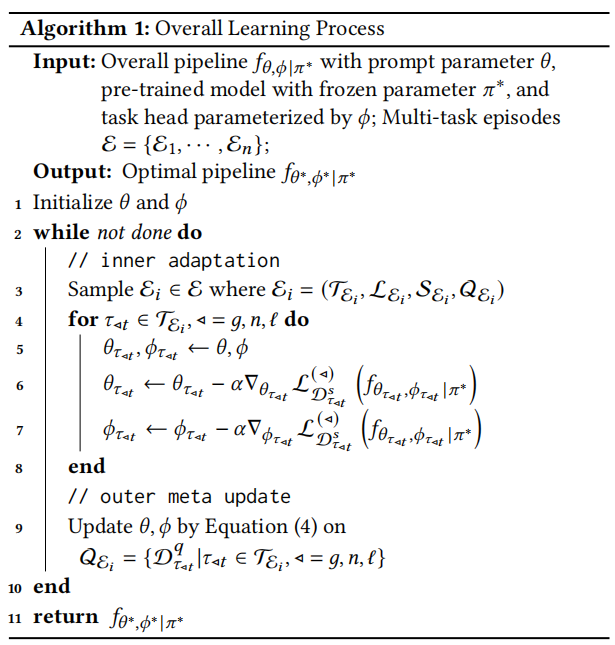

We introduce a meta-learning technique to learn more adaptive graph prompts for multi-task settings. The process constructs meta prompting tasks from multiple tasks and updates the parameters of the tasks by gradient descent.
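The general shape of such a procedure can be sketched with a MAML-style loop on a deliberately tiny problem. Everything here is an assumption for illustration: the prompt is a single scalar `p`, each meta prompting task is a (support target, query target) pair, and the quadratic loss `(p - target)**2` stands in for the loss of a real graph model.

```python
def loss_grad(p, target):
    """Gradient of the toy loss (p - target)**2 with respect to p."""
    return 2.0 * (p - target)

def meta_train(tasks, p=0.0, inner_lr=0.1, outer_lr=0.1, steps=200):
    """Learn an initial prompt `p` that adapts well to every task after one inner step."""
    for _ in range(steps):
        meta_grad = 0.0
        for support, query in tasks:
            # Inner loop: one gradient step on the task's support loss.
            adapted = p - inner_lr * loss_grad(p, support)
            # Outer gradient: query loss at the adapted prompt, chained through the
            # inner step. For this quadratic loss, d(adapted)/dp = 1 - 2 * inner_lr.
            meta_grad += loss_grad(adapted, query) * (1.0 - 2.0 * inner_lr)
        # Outer loop: gradient descent on the averaged meta-gradient.
        p -= outer_lr * meta_grad / len(tasks)
    return p

# Two toy tasks whose support and query targets coincide.
tasks = [(1.0, 1.0), (3.0, 3.0)]
p_star = meta_train(tasks)
```

With these two symmetric tasks the learned initialization settles midway between the task targets, which is the point from which one inner step serves both tasks equally well. With real prompts the analytic derivative would be replaced by automatic differentiation through the inner update.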